Article for February: All forms of writing

Hi everyone!

For February we chose to read Greg Downey's critique of Stanislas Dehaene's 2009 book Reading in the Brain. In his article All Forms of Writing (Mind & Language, 2014), Downey discusses Dehaene's interpretation of the evolution of writing in terms of neural efficiency, and his neglect of anthropological evidence: the environmental, technological, social and historical factors that play a significant role in the development of writing systems. To illustrate the problem with Dehaene's "…simple account of cultural evolution, driven inexorably by neurological imperative toward alphabetic writing unless impeded" (p. 316), Downey cites the independent pre-Columbian writing systems. He also mentions a recent "unusual" case: the orthographic changes emerging from technological advances in computer-mediated communication, such as the use of acronyms, homophones and emoticons. Downey stresses the importance of genuine cooperation between cognitive scientists and anthropologists, which he believes is the only way to avoid both radical cognitive universalism and cultural relativism in the study of cultural evolution.

What are your thoughts on the relationship between cognitive science and cultural anthropology in the study of cultural evolution? And what do you think of Downey's view that writing and reading are the perfect empirical case to demonstrate that "…the old, single-sided perspectives (…) are inadequate to the task" (p. 318)?

Olivier Morin 15 February 2017 (18:54)

I enjoyed reading Downey's paper, and I agree with his basic point, namely that not everything that matters to culture is cognitive or neural; most often, brains are simply one system in which multiple causalities play out. I'm not sure, however, that there is any interesting prediction to derive from this idea, or any meaningful disagreement to be had over it. (I don't see what prevents the targets of Downey's criticisms from simply replying, "Yes, so what?") On the other hand, I agree that when discussing cognitive constraints on writing we should be very careful about the non-independence of data points, and keep in mind that what works for printed characters may not work for other types. I try to do that in my own work as much as possible. I also share Downey's skepticism about directional narratives (the super-highway to the alphabet) in the evolution of writing, but it's worth pointing out that the old teleological package is not an innovation of cognitive scientists: in fact, Dehaene mostly takes his cue from standard grammatology on these topics.

James Winters 17 February 2017 (10:17)

With Downey's paper, I found myself learning something new in almost every paragraph, which made for a really enjoyable read. But I want to pick up on two points he made, regarding the variation in writing systems and the presence of faces in Mayan scripts.

The first point is that Downey seems to conflate patterns of structure with constraints on the space of possible structures. Patterns of structure, as Downey rightly points out, are subject to various cognitive, cultural and ecological factors (writing systems show a considerable amount of variation, and this variation is due to multiple forces acting on the system). But the central thesis of Dehaene's book is that writing systems are constrained by the organisation of brain circuits that we recycle as we acquire literacy. In short, our cognitive capacities set bounds on the space of possible writing systems, with some structures being more efficient to process than others. I think Dehaene would readily acknowledge that exploration of the space, in terms of the variation we observe in the structure of writing, is contingent on other factors (some of which might push against a pressure for a more efficient system).

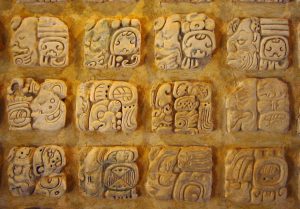

My second point is about the presence of faces in Mayan glyphs. Since the publication of Reading in the Brain, Dehaene has gone on to show (see here and here) that words and faces actually compete for the same cortical territory in illiterate individuals, suggesting that (a) face shapes are efficient stimuli for the reading circuitry, and (b) the Mayan writing system might end up supporting the neuronal recycling hypothesis.

Barbara Pavlek 17 February 2017 (14:32)

I liked Downey's call for cooperation and for acknowledging the importance of details as well as the "big picture" in the study of cultural evolution. But it seems to me, as a rookie in the field, that by now cognitive scientists and anthropologists already work well together in interdisciplinary areas, and that both try to avoid the extremes and find a middle ground in order to advance the field.

What I found most interesting in this article are Downey's examples of different graphic communication systems, some of which, like Braille and the popular emoticons, are not language-based and do not encode spoken language, but are still used for graphic communication. They reminded me of the recent SF film Arrival, based on the Ted Chiang short story we read together last year. The story describes the arrival of aliens on Earth and the struggles of a physicist and a linguist to communicate with them. As it turns out, the aliens have two completely different systems for "spoken" and "written" communication, and their "written" language consists of strange graphics, has no concept of time, and demands highly complex cognitive processes to be "read".

All the different factors that Downey lists (environmental, social, technological and historical) shape human cultures in different ways: some cultures develop graphic codes, some then use those codes for communication, but there are only two we call writing. Are our criteria for defining writing, as opposed to "non-language-encoding graphic codes", too strict? If we disagree with Dehaene's linear evolutionary path of writing towards the alphabet, should we make our definition of writing more inclusive?

Thomas Müller 24 February 2017 (17:53)

All forms of writing

I agree with the general point of the paper and with your comments, and feel that I don't have much to add. Indeed, a sole emphasis on cognitive universals is a short-sighted view of the development of such a complex and multiply determined system as writing, as is ignoring the cognitive constraints that shape it. There is an easily found middle ground between the extreme positions, as James has already outlined.

What I liked most about the article is that it makes us reconsider the very definition of writing and whether there actually is a strong contrast to graphic codes that don't encode spoken language (see Barbara's comment). Maybe it's the limitation in scope that sets these systems apart?

Piers Kelly 27 February 2017 (14:51)

As other commenters have already pointed out, I think the dispute between these two scholars comes down to the relative prominence of universal neurobiological constraints over situated cultural-historical ones when it comes to understanding reading and writing. I am emphatically not proposing that one set of constraints is more important than the other, but neither, I think, is Downey. "To understand the evolution of writing systems," he writes, "one need take account of political events as much as the configuration of the brain regions adapted to reading." As a card-carrying neuroanthropologist, Downey is, I think, well placed to comment on this.

I concur with Olivier that there may not be an interesting disagreement or prediction to be had here, but I would hazard a reply to the "So what?" question: if we see ourselves as being in the business of social science, it pays to be very clear whenever we presume to untangle any of these constraints (not to mention the independence of data points). Dehaene's naivety about the social contexts of graphic communication may not matter to his larger argument, but along the way he makes a number of careless claims that threaten his general point. Most annoying is his revival of the teleological fallacy that all writing is straining towards the alphabetic principle. He seems disappointed that the Egyptians and Sumerians didn't invent an alphabet, and attributes this to their failure to make "rational decisions" and to their following the "natural slope of increasing complexity".

I would prefer to assume that such significant cultural and technological decisions are going to be meaningful in their historical context, even if they don't conform to our commonsense intuitions. And with Dehaene himself I would also reject the idea that increasing complexity is a "natural slope"; indeed, the processes of conventionalisation/simplification that he describes throughout his book seem rather more "natural" to me. Dehaene likewise argues that mixed writing systems, which use "fragments of both sound and meaning", are a stable attractor and "the best solution". I kind of agree, but I also struggle to think of a system that doesn't fit this definition. And curiously, his much-denigrated Egyptian hieroglyphic writing is canonically "mixed", having graphemes that represent sounds as well as semantic determinatives to distinguish homophones. Now there's a rational decision for you!