Epistemic vigilance… and epistemic recklessness

We have all enjoyed, if that is the right word, conversations with people who seem to have no great regard for the niceties of argument and evidence - people who tell you that homeopathy does work because it cured them of a common cold, in a few days… Or that the FBI (or other such agencies) deliberately created the AIDS virus (or crack cocaine) to destroy Africans (or black Americans)… In many cases, such epistemic lapses are context-specific - the same person who claims that homeopathy does work will insist on proper evidence when buying a dishwasher or deciding on a school for their children.

A recent book called Panic Virus [1] by Seth Mnookin details the extraordinary story of the “vaccinations cause autism” meme. This started with some inconclusive but over-reported studies by a few marginal scientists, and soon ballooned up into a huge social movement, where thousands of distressed parents could exchange information, share their traumatic experience, and read or listen to many (some naive, some downright mendacious) “scientists” promoting wild theories (autism from vaccines, from the preservatives used in the vaccines, from radiation, from lack of vitamins, etc.) and often peddling expensive, untested and dangerous treatments (like painful testosterone injections).

As Mnookin relates, the movement soon acquired many characteristics of a cult...

Especially troubling is the acute paranoia that seems to pervade the online and physical meetings. Most webpages and presentations depict the “establishment” scientists as insensitive if not corrupt (being “shills” of the vaccination industry) and the fringe doctors as heroic mavericks in touch with parents’ and children’s experiences. Websites describe in detail the “obvious” collusion between the (US) government, Big Pharma and the American Academy of Pediatrics. Dissenters are not welcome. Even a neutral observer like Mnookin has great difficulty just interviewing some of the participants or being admitted to (officially public) meetings.

There are many other examples of such beliefs. I chose this because, contrary to standard cases (superstition, gods and ancestors, etc.), it is difficult here to make fun of the believers, if only from a sense of human decency.

The epistemic perspective

In circumstances like these, it would seem that people have shifted from their usual, highly adaptive, default-value epistemic vigilance to some form of epistemic recklessness. How does that happen?

Standard cognitive psychology has several answers. First, the epistemic equipment just isn’t up to par. Human minds are full of unjustifiable biases and unreliable heuristics. Second, the equipment may misfire because of performance issues – people are inattentive, tired, drunk, etc. Third, people’s reason may be over-ridden by emotional processes. In this view, there are many beliefs that we hold because we want to hold them.

Our sophisticated readership will have noticed that these are not very good explanations. Indeed, they are terrible.

Orthogonal to all this, a traditional question in cognition and culture is how cognitive systems create both evidence-based, flat-footed commitment to verifiable beliefs on the one hand, and free-wheeling, brazen gullibility on the other. For instance, we know that many statements of belief are evidence for reflective beliefs - that when people say “bulls are cucumbers”, their mental state is “[It is true that] bulls are cucumbers”, or some such meta-representational frame.

This epistemic perspective starts from the assumption that cognitive systems, on the whole, evolved to build useful and truthful representations of the environment. It then argues that occasional useless beliefs pose no great threat to that main epistemic function.

A non-epistemic perspective - communication for alignment

But we could take another perspective, one I would call strategic.

In this view one can distinguish between two (partly overlapping) categories of communication events (to simplify, I am only considering situations where some person A conveys to B that x is the case):

1. Fitness-from-facts communication events. Here people provide and seek information that may provide benefits to self or others. Communication makes it possible to pool information and experience for mutual benefits - and occasionally for exploitation as well.

2. Fitness-from-coalitional-knowledge events. Here people spread or seek information whose accuracy may be fitness-neutral, but such that knowing people’s attitude to it (they believe it, they deny it, they are indifferent, skeptical, mocking, etc.) provides information about their coalitional alignment vis-a-vis the speaker.

Rumors like the ones mentioned at the beginning of this post (the CIA created AIDS, clothes stores in Orleans are a cover for white-slavery, etc.) provide a good example of this information-for-alignment situation. To simplify a great deal, when people tell me that crack cocaine was designed by the government to kill blacks, [a] they are saying nothing of great direct fitness value to us (we both knew crack was bad beforehand, we were not tempted to try - nothing has changed), but [b] they are also receiving information about our coalitional alignment. If I scoff at the idea or call it an urban legend, I am definitely “out”. If I say that it is indeed terrible and scary, I am in some sense aligning myself with the speaker’s coalition (not that I or she necessarily know what specific coalition is at stake here).

Gossip is a bit more complicated, as it may combine both (1) and (2) processes. If you tell me that the boss is having an affair with her assistant, this may be directly fitness-relevant knowledge (I have better information about someone who may help/hurt me). This may also reveal that you and I are in the same clique inside the organization.

So here is the general proposal: In many contexts, humans seek and spread information that reveals or triggers coalitional alignment. They detect that some information is, as politicians would put it, “polarizing”. They orient to that information, they spread it, they seek other people’s reactions to it.

If this is true, the adaptive value of the process is non-epistemic, so to speak. It does not lie in the referential accuracy of the statements communicated, but in the indirect clues it provides concerning other people’s attitudes.

(Some versions of this were proposed by others, including Tocqueville, Robert Kurzban [2], Dan Sperber, and, inevitably, John Tooby and Leda Cosmides).

Explaining motivation

The main advantage of the strategic view, as a complement to the traditional epistemic perspective, is that it could account for the obvious and important motivational aspects of these forms of communication. People who believe that crack cocaine is a conspiracy don’t just hold that belief. They want to share it, they want to tell many people but especially their friends and acquaintances. Also, people are highly interested in other people’s reactions. They are easily angered by skepticism, and often find that co-believers are sound, good people.

Social scientists have often pointed out that some issues are “polarizing”. But the standard assumption is that [a] people have the beliefs and [b] when they communicate their beliefs, conflict occurs because others have just as strongly held contrary beliefs. The present proposal is that, to some extent, people express these beliefs (and to some extent hold them) because they will have that effect.

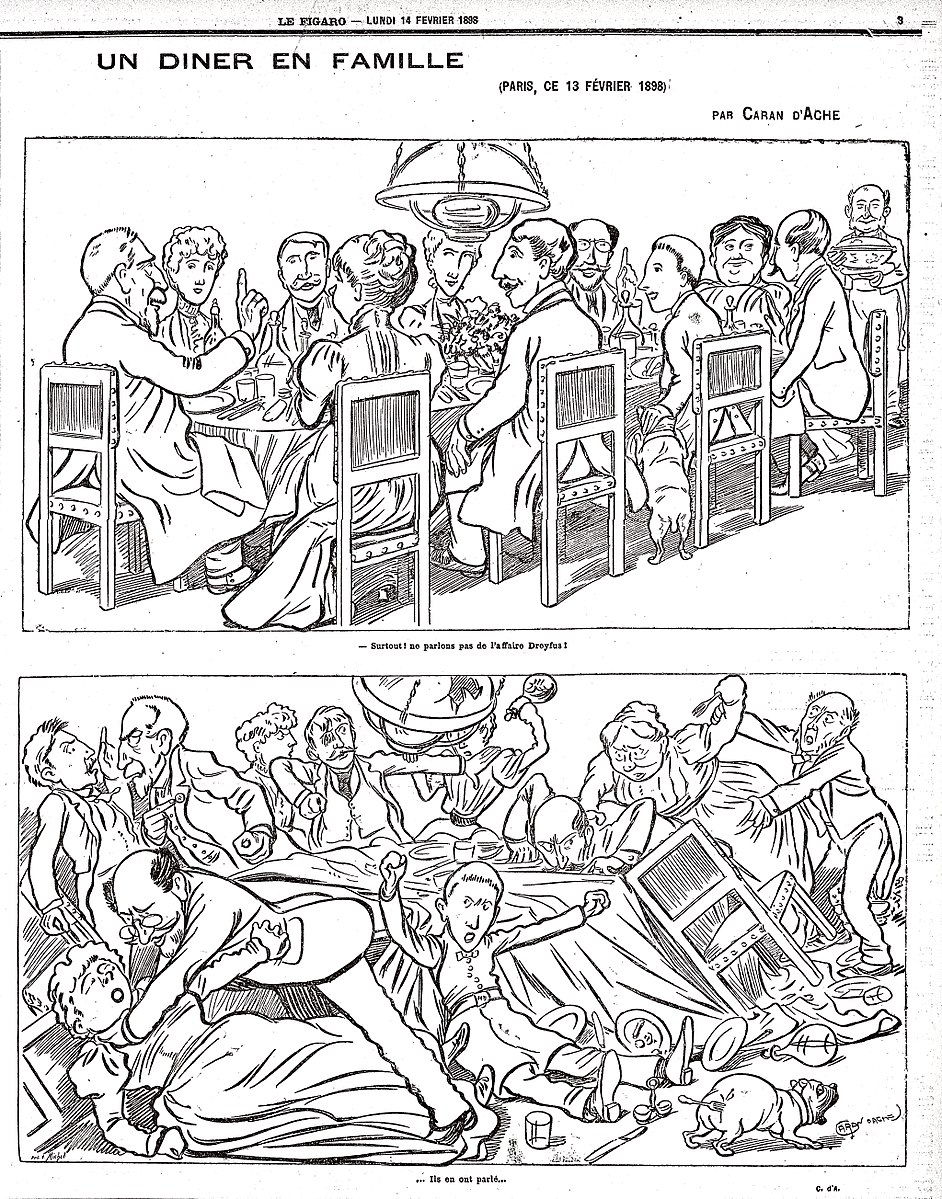

This Caran d’Ache cartoon about the Dreyfus affair, A family dinner, is famous in France.

Top panel: “Above all, let’s not talk about the Affair”.

Bottom panel: “They did talk about it…”.

Source: Wikimedia Commons

Thanks to Coralie Chevallier and Nicolas Baumard, who helped me frame these ideas and to John Christner for ongoing conversations on the topic.

[1] Mnookin, S. (2011). The panic virus: a true story of medicine, science, and fear. Simon and Schuster.

[2] Kurzban, R. (2007). Representational Epidemiology. The evolution of mind: Fundamental questions and controversies, 357.

Hugo Mercier 8 August 2011 (13:53)

Thanks for a great post Pascal! I've started to think about these issues lately, it's quite fascinating (besides conspiracy theories, it might also explain quite a few political beliefs). A detail. It seems that in many cases, a communication event could belong to both of your categories. Maybe it would be more appropriate to cast that in terms of different psychological mechanisms that are more or less used when a statement is being evaluated.

Konrad Talmont-Kaminski 9 August 2011 (00:10)

I really like the way you have put this point here. Fitness-neutrality is of course vital as fitness-enhancing beliefs will be selected on the basis of their usefulness - if one flag was objectively better than others, flags could not be used to determine allegiance. Still, the epistemic perspective does not go away. People who spread the coalitional beliefs still have to feel that there is good reason to accept those claims as true. So, the question becomes - How are the normal epistemic processes sidelined or recruited in these cases? This is the basic point I've been exploring in my own research, the main focus of it being the ways in which such beliefs have to be protected against what might be perceived as counterevidence.

Emma Cohen 9 August 2011 (08:12)

This helps me make sense of something I’ve never really understood. For almost every belief (and perhaps we can include preferences too?), there is often a corresponding social category term – so if I tell you I believe in Muhammad, you could be forgiven for readily paraphrasing that as “she’s a Muslim”; if I avoid eating meat, I’m a vegetarian; if I have a sexual preference for women, I’m a lesbian, etc. etc. It’s interesting to consider what happens when the communicator’s intent is primarily about the content of the belief/preference, but the interlocutor reframes the message communicated in terms of coalitional alignment. That these phenomena are distinct is suggested by a range of reactions: e.g., surprise (“Huh! I guess that does make me an ‘X’ [e.g. liberal/conservative/agnostic, etc.]”), resistance to being “tarred with the same brush” (e.g., “I’m not like all the other Xs”), or even revision of beliefs (e.g., “Well, if all those people are Xs too, I’m out!” – recall recent events in the Catholic church). What has often puzzled me is the resistance belief-holders, including myself, can have to such labels. It’s not just discomfort about being stereotyped, but resistance to what feels like an irrelevant inference (about coalitional alignment rather than belief, or about general social identity or character). On discovering I’m from Northern Ireland, people often ask whether I’m a Catholic or a Protestant. I find it almost impossible to give a one-word answer. My inferential machinery is probably trying to figure out whether the person is interested in my coalitional alignment (and, I guess, potentially what fitness-relevant consequences that could have) or my theological take on the papacy, or whether they’re not particularly interested at all but it’s the only conversation topic that springs to mind at the time.
Depending on their information, they might think that “Northern Irish Protestant/Catholic” is a synonym for nasty bigot, queen/pope-hugger, bomb-making expert… In any case, I get uncomfortable about having to make a declaration so early in an acquaintance over something that I consider quite irrelevant to my social identity/epistemic beliefs (but that could be a big deal for *them*). Likewise, it’s always seemed perfectly natural for someone to call me a “cognitivist” on discovering that I work in the cognition and culture area. I just know that I don’t like it much, especially if I’m not sure that the interlocutor understands what my cognitive approach to culture is, and is keen to clarify that she is *not* a cognitivist. This often results in attempts to clarify my position and to get back to the epistemic issues that were so swiftly derailed by what look suspiciously like coalitional concerns. For example, I might say something to the effect that it’s the method/question/etc etc that is relevant (not whether I am or am not one of those “cognitivist” folks). Pascal’s framing of these issues suggests to me that, in so doing, I’m perhaps not so much motivated to sell my epistemology, but rather to establish common ground. Makes me think that next time, I should probably just pick a less controversial topic (favourite ice-cream, colour, sporting hero…). On a related and more general note, perhaps the coalition-alignment side of belief-adherence actually blurs some lines between belief and preference. Just what is someone saying when they gushingly tell you they *just loved* your latest paper? Or that they have a soft spot for the scientific method? Here, perhaps (apparent) epistemic alignment subserves social affiliation. By the same token, friendships are often severed over differences of (epistemic) belief. If I like you and am in your gang, all else being equal, I should demonstrate a preference for your beliefs.
In fact, when all else is not equal (e.g. the evidence is stacked against your belief), my loyal and potentially costly adherence (or seeming adherence) to the belief can operate as an even more effective signal of affiliation. A reason, of course, why coalitions in science aren’t always a good thing but blind review is… (N.B. For the record, I’m a carnivorous, non-Muslim, heterosexual, cognitivist. I’d rather that self-definition didn’t go on my gravestone, though.)

Hugo Mercier 11 August 2011 (20:07)

It seems as if maybe you're skipping an interesting distinction. You say that "For almost every belief (and perhaps we can include preferences too?), there is often a corresponding social category term." Yet it seems to me that the immense majority of our beliefs or preferences do not result in social category terms (I believe my name is Hugo, and I have grown to quite like it, but I don't think that puts me in a special category (ok, maybe narcissistic...)). It may mostly be the beliefs transmitted through the second type of communicative act/cognitive mechanisms that Pascal mentions that are prone to yielding social categorization. The fact that we first (or even only) think of these beliefs and preferences when we think about beliefs and preferences would then be obviously related to their social nature: they are often the most relevant beliefs to communicate.

Emma Cohen 12 August 2011 (16:30)

I agree - Hugo is a very likeable name ;) You're absolutely right - that statement needed some qualifying, and quite possibly along the lines you suggest. The conditions under which categorization happens presumably have something to do with relevant ways of carving up the social world, in terms of fitness and/or cognitive processing efficiency. There are few fitness benefits or predictions/inferences to be derived from my viewing Hugos who believe their name is Hugo as a special category of person. Hugos who believe their name is Jesus could be more interesting, but, as far as I know, are too uncommon to be of any use. Likewise for the case of people who *like* the name Hugo - as compared with people who like the name Hitler, for example… In any case, though an important qualification, I think this is tangential to the rest of that post, which (among other rambling thoughts) was about what happens when someone communicates a belief in the “facts mode” and this activates a coalitional-alignment reading by the listener (normally from a pre-existing category, such as Muslim, lesbian, vegetarian, etc.) rather than a (desired) response about the belief per se. So (maybe a rubbish example, but…): Communicator A: “I believe Andy Murray will win Wimbledon 2012 if he keeps improving mental focus” Respondent B (coalition-based): “You Murray fans are a bunch of dreamers!” *versus* Respondent C (facts-based): “I believe Murray can win if he eats a deep fried Mars Bar before every match.”