Why is misinformation so sticky?

When my mother warned me against talking to strangers in the street, she might have had not only security concerns but also epistemological ones. Misinformation, which is so widespread in contemporary societies, is sticky! According to Stephan Lewandowsky, Ullrich K. H. Ecker, Colleen Seifert, Norbert Schwarz and John Cook, the authors of an excellent survey article on the topic, “Misinformation and its Correction: Continued Influence and Successful Debiasing”, just published in Psychological Science in the Public Interest, once you start believing bogus information, the belief becomes very difficult to correct, even when you are told that the information was bogus.

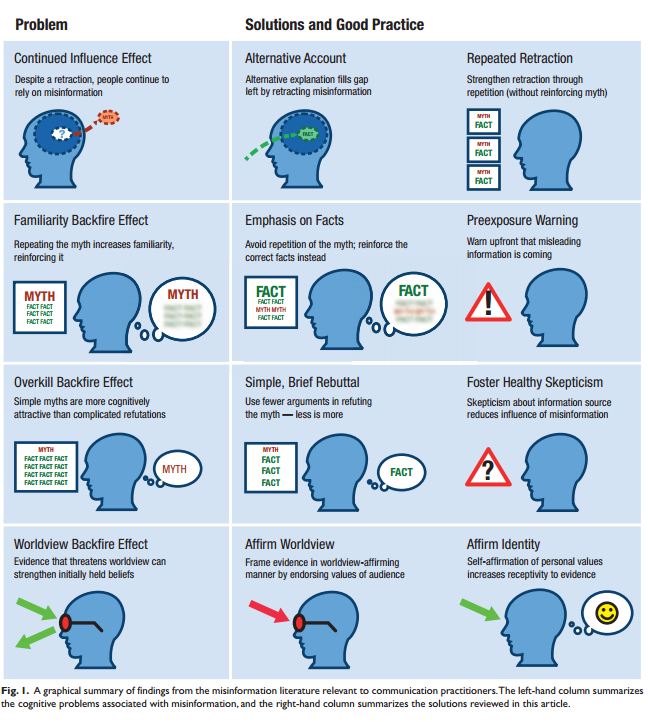

The article discusses the main sources of misinformation in our societies and the cognitive mechanisms that may be responsible for its resilience in our minds, even when we are exposed to retractions. The authors also offer solutions to the problem that may help researchers, journalists and practitioners of various kinds to find the right packaging of counter-messages that challenge previously acquired beliefs.

Misinformation is not ignorance: it is worse. When you are merely ignorant about something, you are not opinionated. People who do not know anything about a subject often come up with simple heuristics (as argued in particular in a 2002 paper by Goldstein & Gigerenzer) that help them make the right guesses. When you have been misinformed, however, and have made the effort of acquiring a false belief about, say, the potential harm of a vaccine, or Barack Obama not being eligible to be President of the United States because he was born in Kenya, you don’t easily let your beliefs go without anything in exchange, because you have usually made up costly justifications and narratives in order to sustain them.

Four main sources of misinformation haunt our lives: (1) rumours and fiction; (2) governments and “propaganda”; (3) the vested interests of various organizations; and, of course, (4) the media.

Communication thus plays the major guilty role in rooting bullshit in people’s minds. This is, I would argue, because when we engage in a communicative exchange, we take a stance of trust, at least for the sake of conversation, towards what is said: we follow rules of good cognitive conduct by presupposing that those who are communicating with us have something relevant to tell us. In most contexts, being exposed to what other people say is enough to come away believing that what they say is true. Once we accept, even if provisionally, what others say for the simple reason that they say it, our further checking is not very elaborate (in the absence of strong reasons to think that someone wants to fool us). Our filtering strategies limit themselves to checking the compatibility with other things we hold true, the coherence of what has been said, the (apparent) credibility of the source and the possibility that other people believe the same thing.

Thus, mere exposure to malicious communicative sources can infect us with beliefs that we think we have reasons to accept because we have “legitimized” them (by telling ourselves that they come from credible sources, that they are coherent, that there is a social consensus about them, etc.).

What is most striking about misinformation is its resistance to retraction: even when exposed to a retraction, even when they have understood why the information was wrong, people hesitate to change their minds. Updating information is costly. We construct narratives, mental models of a certain event, that stick in our minds and that we re-enact when presented with retractions. Also, it is humiliating to be asked NOT to believe what we previously believed, and we usually ask for something in return for changing our views.

Allegory of Spring. Or is it?

The last section of the paper presents strategies to correct misinformation, based on some simple principles. When people are presented with a retraction, they typically have the misinformed content repeated to them. It is more effective to build an alternative narrative that avoids repeating the original bogus one. The alternative narrative is also the “goodie” people may like to have in exchange for giving up their beliefs. I remember once reading a new interpretation of the allegory represented in the Italian Renaissance painting La Primavera by Sandro Botticelli. It put forward an alternative, highly structured narrative according to which the central character of the picture was not an allegory of the spring but the muse Philosophy, and the whole picture was a representation of the wedding between Philology and the god Mercury. The relevance of the alternative narrative was high enough to “erase” the previous narrative I had learned at school.

The article ends with a nice illustration of how misinformation can be corrected (see below), and some simple recommendations for practitioners.

A lot of material, as the authors suggest, needs further investigation: for example, the role of emotions in spreading false beliefs, the cultural differences that may exist in accepting misinformation, and, of course, the role of social networks. Clearly, the emotional aspect and the “social network” aspect of the spreading of information are related to the attractiveness of a piece of information we may decide to spread: even if the evidence for its truth is weak, the potential effects we may have on other people by communicating it may make spreading it too tempting to resist. This “virological” drive of being more prone to communicate information than to consume it for our cognitive benefit is obviously a feature of our mind that needs further inquiry, especially in the Twitter/Facebook-driven information societies.

Hal Morris 18 June 2015 (05:20)

RE: 'This “virological” drive of being more prone to communicate information than to consume it for our cognitive benefits is obviously a feature of our mind that needs further inquiry, especially in the Twitter/Facebook-driven information societies.'

An interesting point. In highly opinionated online forums, I think there is often a competitive spirit to come up with the most idiotic thing some liberal icon supposedly has lately done (if the tone is anti-liberal), or otherwise to come up with some spectacular extreme, and rarely is anyone interested in fact-checking.

RE: 'In most contexts, to be exposed to what other people say is enough to come up believing that what they say is true.'

I suspect that in Paleolithic conditions, elaborate misinformation is just too difficult, especially in very small communities. Even in modern conditions, it takes great separation between groups of people for one group to get away with making effective use of lies.